Dual-source deep neural networks for human pose estimation

Xiaochuan Fan, Kang Zheng, Yuewei Lin, Song Wang

CVPR 2015

Idea

Most previous work on human pose estimation is based on a two-layer part-based model. The first layer focuses on local (body) part appearance, and the second layer imposes contextual relations between local parts. Pose estimation methods built on part-based models are usually sensitive to noise, and the graphical model lacks the expressiveness to model complex human poses. Furthermore, most of these methods search for each local part independently, and the local appearance may not be sufficiently discriminative to identify each local part reliably.

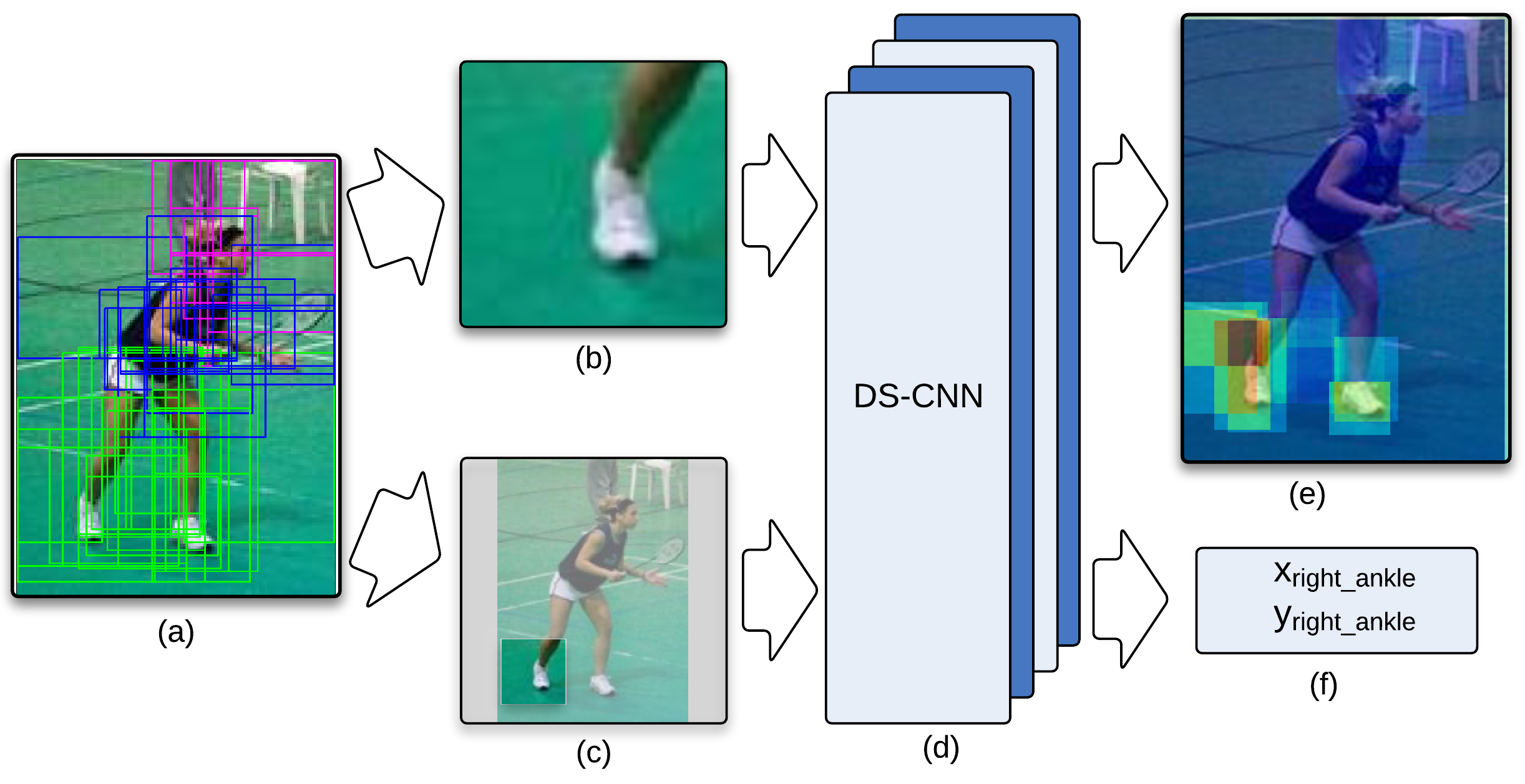

In this work, we propose a dual-source CNN (DS-CNN) based method for human pose estimation that does not use any explicit graphical model. The method uses both the local part appearance in image patches (part patch) and the holistic view of the full human body (body patch) for more accurate pose estimation. By adding a binary mask to the body patch, we combine global and local information in a single input. Taking both the part patch and the body patch as inputs, the proposed DS-CNN performs unified learning for both joint detection, which determines whether an object proposal contains a body joint, and joint localization, which finds the exact location of the joint in the object proposal.
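To make the body-patch input more concrete, here is a minimal sketch of how a binary mask could be attached to a body crop. It assumes the mask marks where the part patch lies inside the body patch and is stacked as a fourth channel; the function name `make_body_patch`, the box format, and the channel layout are illustrative assumptions, not the released DS-CNN data layer. Resizing to the network input size is omitted.

```python
import numpy as np

def make_body_patch(image, body_box, part_box):
    """Crop the body region and append a binary mask channel.

    image:    (H, W, 3) array.
    body_box: (x0, y0, x1, y1) pixel box of the full body, half-open.
    part_box: (x0, y0, x1, y1) pixel box of the part patch, half-open.
    Returns an (h, w, 4) float32 array: RGB crop plus mask (assumed layout).
    """
    bx0, by0, bx1, by1 = body_box
    px0, py0, px1, py1 = part_box

    body = image[by0:by1, bx0:bx1].astype(np.float32)
    h, w = body.shape[:2]

    # Part box expressed in body-patch coordinates, clipped to the crop.
    x0, y0 = max(px0 - bx0, 0), max(py0 - by0, 0)
    x1, y1 = min(px1 - bx0, w), min(py1 - by0, h)

    mask = np.zeros((h, w), dtype=np.float32)
    if x1 > x0 and y1 > y0:
        mask[y0:y1, x0:x1] = 1.0  # 1 inside the part patch, 0 elsewhere

    return np.concatenate([body, mask[..., None]], axis=2)
```

For example, `make_body_patch(img, (10, 20, 50, 90), (20, 30, 30, 40))` yields a 70 x 40 crop whose last channel is 1 only over the 10 x 10 part region.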

Paper

About Performance

In the paper, we evaluated our work using the PCP metric, which considers a part prediction correct if its endpoints are closer to their ground-truth locations than half the length of the limb, on average over the two endpoints. Moreover, multiple candidate predictions from models with different input dimensions were used to match the ground truth. However, some previous methods were evaluated using a single candidate and the stricter PCP. To compare our work fairly with state-of-the-art methods, we provide our predictions together with a comparison of strict PCP and PDJ results, using a single candidate, on the Leeds Sports Pose (LSP) dataset with Person-Centric (PC) annotations. We thank Pishchulin et al. for their evaluation framework.

Strict PCP (our predictions)

| Method | Torso | Upper leg | Lower leg | Upper arm | Forearm | Head | Full body |
|---|---|---|---|---|---|---|---|
| Wang&Li, CVPR'13 | 87.5 | 56.0 | 55.8 | 43.1 | 32.1 | 79.1 | 54.1 |
| Pishchulin et al., ICCV'13 | 88.7 | 63.6 | 58.4 | 46.0 | 35.2 | 85.1 | 58.0 |
| Tompson et al., NIPS'14 | 90.3 | 70.4 | 61.1 | 63.0 | 51.2 | 83.7 | 66.6 |
| Chen&Yuille, NIPS'14 | 96.0 | 77.2 | 72.2 | 69.7 | 58.1 | 85.6 | 73.6 |
| Ours | 95.4 | 77.7 | 69.8 | 62.8 | 49.1 | 86.6 | 70.1 |
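The difference between the two PCP variants can be sketched as follows. The loose rule follows the description in this section (average endpoint error within half the limb length). The strict rule shown here is the commonly used definition, in which each endpoint must individually satisfy the threshold; that definition, the function name `pcp_correct`, and the use of `<=` at the boundary are assumptions for illustration, not the evaluation framework of Pishchulin et al.

```python
import numpy as np

def pcp_correct(pred, gt, strict=False, thresh=0.5):
    """Decide whether one limb prediction counts as correct under PCP.

    pred, gt: (2, 2) arrays holding the two (x, y) endpoints of a limb.
    strict=False: mean endpoint error must be within thresh * limb length.
    strict=True:  each endpoint error must be within thresh * limb length
                  (assumed definition of strict PCP).
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)

    limb_len = np.linalg.norm(gt[0] - gt[1])   # ground-truth limb length
    errs = np.linalg.norm(pred - gt, axis=1)   # per-endpoint distance

    if strict:
        return bool(np.all(errs <= thresh * limb_len))
    return bool(errs.mean() <= thresh * limb_len)
```

A prediction with one accurate endpoint and one poor endpoint can pass the loose rule while failing the strict one, which is why results under the two protocols are not directly comparable.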

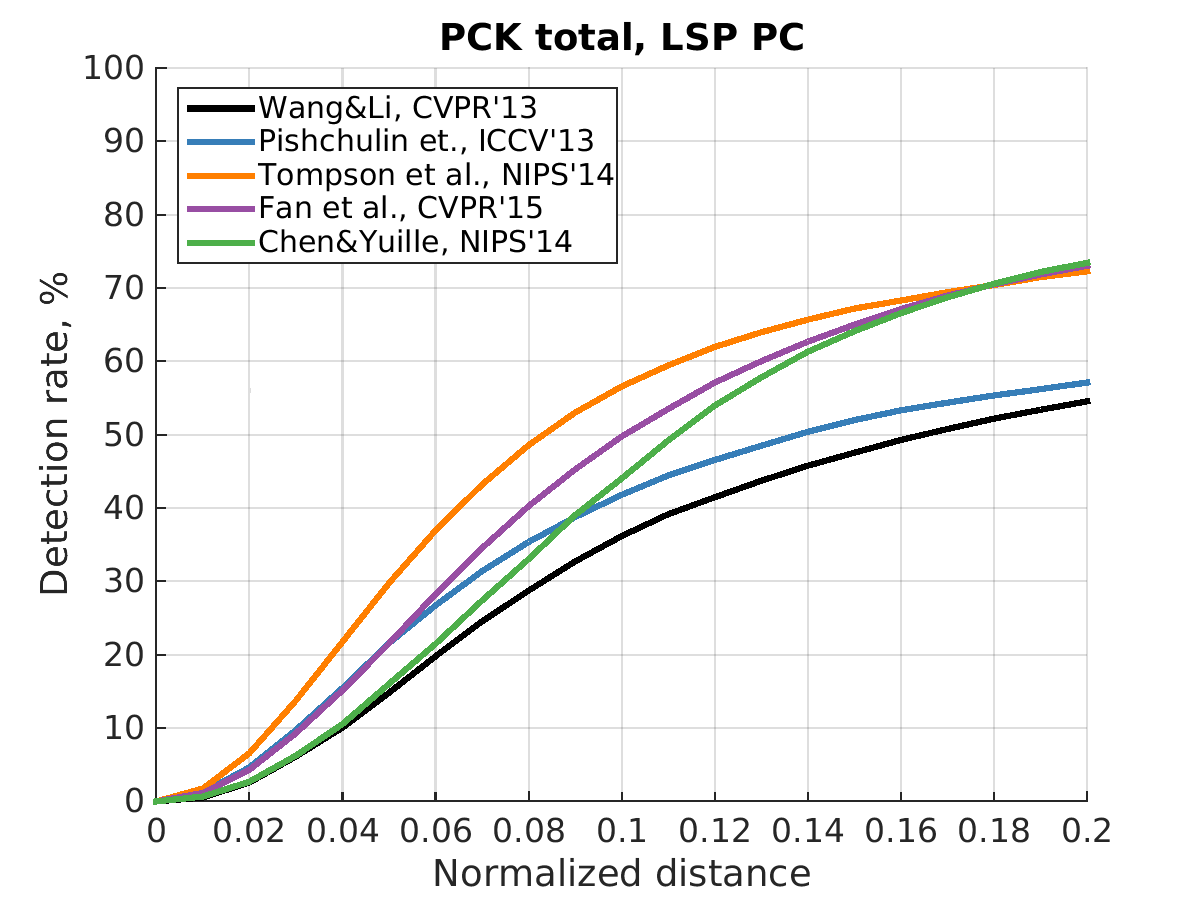

PDJ curve

PDJ value (normalized distance equal to 0.2) (our predictions)

| Method | Head | Shoulder | Elbow | Wrist | Hip | Knee | Ankle | Full body |
|---|---|---|---|---|---|---|---|---|
| Wang&Li, CVPR'13 | 84.7 | 57.1 | 43.7 | 36.7 | 56.7 | 52.4 | 50.8 | 54.6 |
| Pishchulin et al. | 87.2 | 56.7 | 46.7 | 38.0 | 61.0 | 57.5 | 52.7 | 57.1 |
| Tompson et al., NIPS'14 | 90.6 | 79.2 | 67.9 | 63.4 | 69.5 | 71.0 | 64.2 | 72.0 |
| Chen&Yuille, NIPS'14 | 91.8 | 78.2 | 71.8 | 65.5 | 73.3 | 70.2 | 63.4 | 73.4 |
| Ours | 92.4 | 75.2 | 65.3 | 64.0 | 75.7 | 68.3 | 70.4 | 73.0 |
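As a rough illustration of how a PDJ value at normalized distance 0.2 can be computed, the sketch below counts a joint as detected when its error is within a fraction of a per-image reference length. Normalizing by torso size is the usual convention and is assumed here; the function name `pdj` and the array shapes are hypothetical and not taken from the evaluation framework used for the table.

```python
import numpy as np

def pdj(pred, gt, torso_size, frac=0.2):
    """Per-joint detection rate at a normalized distance threshold.

    pred, gt:   (N, J, 2) arrays of predicted / ground-truth joint positions.
    torso_size: (N,) array of per-image normalization lengths (assumed torso size).
    frac:       normalized distance threshold (0.2 matches the table caption).
    Returns a (J,) array with the fraction of images in which each joint is detected.
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    torso_size = np.asarray(torso_size, dtype=float)

    dist = np.linalg.norm(pred - gt, axis=2)        # (N, J) joint errors
    detected = dist <= frac * torso_size[:, None]   # (N, J) boolean hits
    return detected.mean(axis=0)
```

Multiplying the result by 100 gives percentages on the same scale as the table; sweeping `frac` over a range of thresholds would trace out a PDJ curve.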

Code

[DS-CNN data layer] [test code] [trained model]